Some people think we’re about to enter the next AI winter because interest in AI has declined over the last few months. But is that really true?

Let's rewind the tape for a moment.

People talked about AI more in the past 12 months than in the previous 12 years. Before Stable Diffusion, AI was alien to most folks. Sure, it was there in recommendation algorithms and voice assistants, and when you asked your phone for directions, but it wasn't something most people actively thought about. They just used it.

Stable Diffusion changed public perception dramatically: for the first time, many people saw a “naked” AI. You give it text, and it gives you an image. Nothing more. It wasn’t the first model of this kind. DALL-E was already available, but it had very limited capabilities, and most people couldn’t really get their hands on it. SD became the first widely adopted model because anyone with a decent consumer-level GPU could run it locally, and because DreamStudio offered generous free trials.

As disruptive as Stable Diffusion was back then, it’s still a use-case-specific model: it lets you create and modify images but hardly does anything else. Many people had fun with it, and it sparked a lot of creativity and even more discussion about AI, but there are only so many use cases for a text-to-image model.

ChatGPT, on the other hand, was a rare piece of general-purpose magic. There had been plenty of chatbots before, but this one was different. It felt like chatting with a real flesh-and-blood buddy. And it was free. Seriously, this was one of the best marketing moves I have ever seen: giving away something THAT powerful for free, with such a low barrier to entry, was absolutely genius. GPT models had been around for a while, but very few people cared about them. When your entire Twitter and LinkedIn feed is talking about this thing, though, and you can use it for free, you’ll likely go and give it a try, if only out of curiosity.

I get it; you're probably thinking, "Tell me something I don't know." But bear with me: here comes the interesting part.

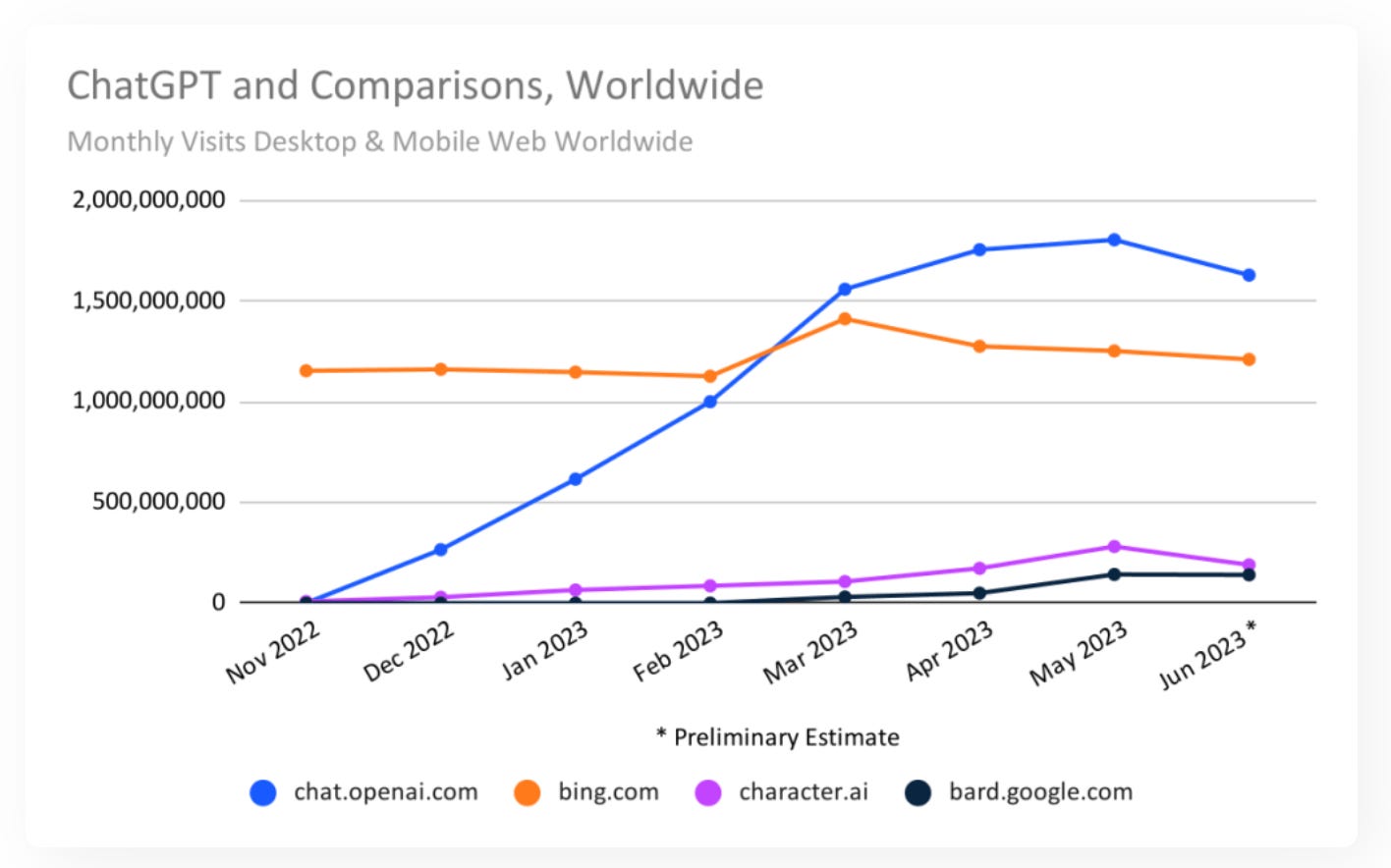

The ChatGPT site was booming – each month from November to May saw more traffic than the last. It wasn't just a fad; it was a utility for personal questions, for business tasks, for everything in between. But then the traffic began to dip.

Some naysayers jumped on this like a cat on a laser pointer, touting the declining traffic as definitive proof that AI is overhyped and useless. "There you have it," they said, "ChatGPT's decline proves these LLMs are a waste of time and will soon be forgotten."

But that’s utter bullshit, and I’m going to tell you why.

The adoption of generative AI is happening in stages, as with any other technology (though, frankly, much faster than with most inventions before it). Think of it as a journey.

At the first stop on this journey, you've got the people who've taken ChatGPT or Stable Diffusion out for a spin. Many of them had a good time, but for most, it didn't turn into a lasting relationship.

A slice of those early dabblers took things to the next level – incorporating AI into their everyday grind. We've got "secret cyborgs" among us – employees sneaking generative AI into their workflow despite whatever company policies might say. For them, the time-saving perks outweigh any risks.

Also at this second stop are the developers, who have eagerly adopted GitHub Copilot and ChatGPT to speed up the most boring and annoying parts of development, like generating boilerplate code or figuring out why this damn library doesn’t work as intended.

But here is the thing – the path doesn't hit a dead-end at step two!

There are at least two more stops, and I don’t know how many lie beyond them.

At the third stop, talented and curious developers are building their own AI-driven tools. Some do it for themselves or the community, building PDF readers, language tutors, game masters, and whatnot. Others build tools for internal use within their organizations. In one of my previous posts, I shared how I was implementing a newsletter assistant. It’s currently available on GitHub, and believe me, it saves us a tremendous amount of time when preparing our weekly newsletter.

Think these builders are rare? Think again. Just look at LangChain, a popular framework for building applications on top of LLMs. Its GitHub star count is through the roof at over 60,000. Plus, it has an incredibly engaged developer community 31,000 strong and more than 13 million downloads. And remember, LangChain is just one tool in an ever-expanding toolbox.

So, what's the next stop on this journey? Some of these intelligent apps graduate from the development phase to become full-blown SaaS products. And trust me, the volume here is staggering.

Aggregator platforms are sprouting up to catalog these tools, and one of the big ones is "There's an AI for That". At last count, it listed more than 7,000 AI-powered apps of every stripe. And get this: most of them have sprung up like wildflowers after a spring rain in just the last few months.

And this leads us to the inevitable next leap – a brave cohort of early adopters putting these remarkable tools to work, ushering in a new era of AI-driven capabilities.

Take a minute to mull this over: would you rather explain your marketing tasks to ChatGPT every single time or would you prefer the convenience of a couple of clicks? ChatGPT is a powerhouse, no doubt, especially when you add plugins into the mix. But there's a ceiling to its convenience. You might soon find yourself managing an unwieldy collection of prompts and enduring the tedium of copy-pasting them for every similar task.

As your needs crystallize, automation and simplicity become less of a want and more of a need. This is the very reason most of us prefer a graphical user interface (GUI) over a command-line interface (CLI) for day-to-day tasks. No cheat sheets, no painstaking assembly of commands: just a few button clicks and you're done, with less time spent and fewer errors.

Think of it this way: these AI-powered apps are to ChatGPT what a sleek GUI is to a clunky CLI. It's a total transformation of the user experience.

So, let's settle this once and for all: people aren't using less AI; they're just using it differently. Instead of chitchatting with ChatGPT, users are gravitating towards bespoke tools with user-friendly interfaces. It’s that straightforward.

So let's drop the doom and gloom, okay? The idea that we're headed into another AI winter, or that AI isn't living up to its hype, simply doesn't hold up. We're just getting started on this journey, and trust me, the road ahead is long but promising.